If your query is forcing the db to search through millions of rows to fetch data, indexing a table can help improve performance. Here’s how to do it on Postgres.

CREATE INDEX tablename_columnname_anothercolumn_index ON tablename (columnname, anothercolumn)ELI5 Index

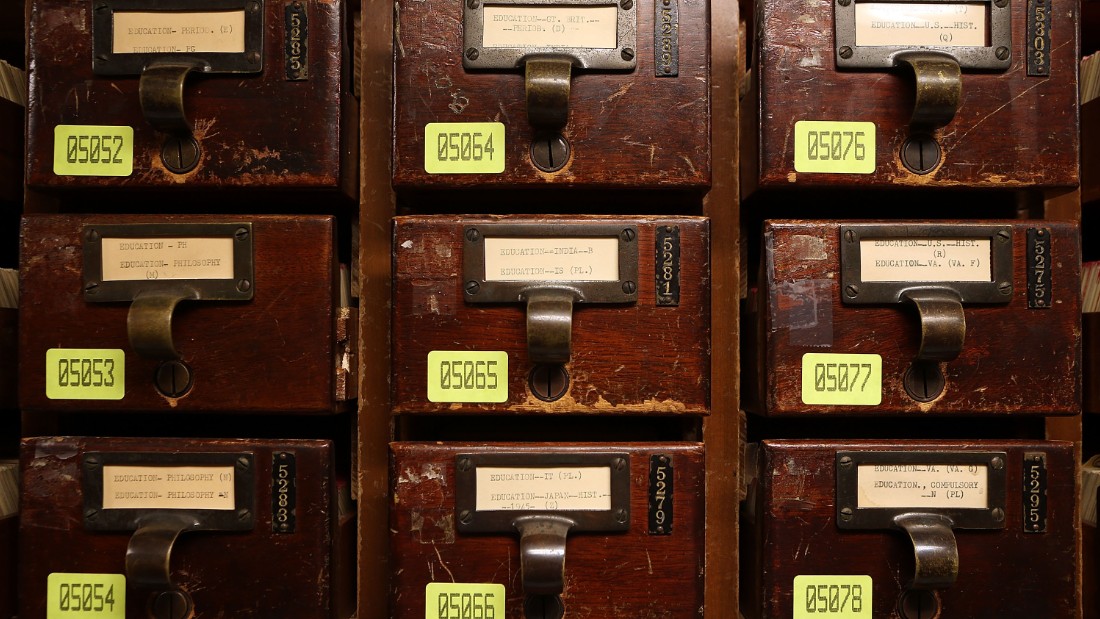

If you’re actually five you probably haven’t seen one of those indexes in wooden box that you saw at public libraries, but it behaves the same way. Instead of searching through each and every book (row), with the index you’ll know the exact place of a book. In db terms, you’ll search through fewer rows.

Caveats

- A DB server will cache indexes in memory so don’t create too many.

- Indexes will effect insertion performance negatively.

- Personally saw 2-300% performance gains for queries but mileage may vary.

Tip

Speaking of queries, this might sound obvious, but you want to index columns that you perform most of your queries on. So say you look through a date range and the model name most often. Those are the columns you’ll want to index.

Look Up Current Indexes

select *

from pg_indexes

where tablename not like 'pg%';Laravel

For Laravel users you can create indexes in your migrations

public function up()

{

Schema::create('tasks', function (Blueprint $table) {

$table->increments('id');

$table->integer('user_id')->index();

$table->string('name');

$table->timestamps();

});

}